May 7, 2023

Aditya Gaur

Current State of AI in Cybersecurity

The big discussion

When OpenAI announced ChatGPT, it was all the rage. It became the fastest-growing consumer application to date, reaching an estimated 100 million users just two months after launch. ChatGPT, Microsoft's Bing Chat (built on OpenAI's models), and Google's Bard, the generative AI giants, are changing the landscape together, with a new use case popping up every hour. These AI models have taken artificial intelligence's ease of use and accuracy to a new level and opened up its productive and creative uses to the public.

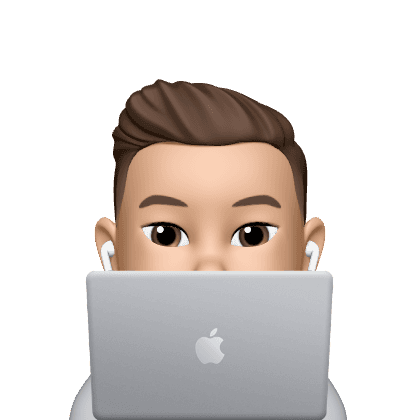

In March 2023, OpenAI announced GPT-4, along with a GPT-4-powered version of ChatGPT, bringing the next revolution in generative AI to a wide audience. GPT-4 is such a leap over GPT-3.5, the model behind the original ChatGPT, that calling it "GPT-3 on steroids" is an understatement. OpenAI's researchers compared the models on academic, technical, and reasoning-based exams such as the GRE, SAT, LSAT, and Uniform Bar Exam. The results speak for themselves: on a simulated bar exam, OpenAI reported that GPT-4 scored around the top 10% of test takers, while GPT-3.5 scored around the bottom 10%.

What's the problem?

The remarkable thing is that AI models have become capable enough to take over a variety of tasks from humans, freeing up resources that can be redeployed to strategically essential work. The downside stems from exactly that capability: because AI models are now so good at replicating human effort, they also open up new opportunities for bad actors. The real question is, "What does that mean for cybersecurity?"

Generative AI and other AI products have already proven highly effective at automating and optimizing processes that would otherwise take hours of manual work, so it is essential for modern enterprises to understand the two contrasting sides of this technology. With each update, AI models become more capable, faster, and more accurate in the information they produce and the reasoning they offer (AGI? 👀).

However, this productivity and speed come at a cost. Cybercriminals, who are increasingly looking to use AI to their advantage, can exploit the same features that make AI invaluable for legitimate users. They have access to the same state-of-the-art AI technology that security professionals use, and they can turn it toward developing advanced attacks that bypass even robust security measures. As AI systems grow more sophisticated, defenders face an unprecedented challenge.

Why does this matter?

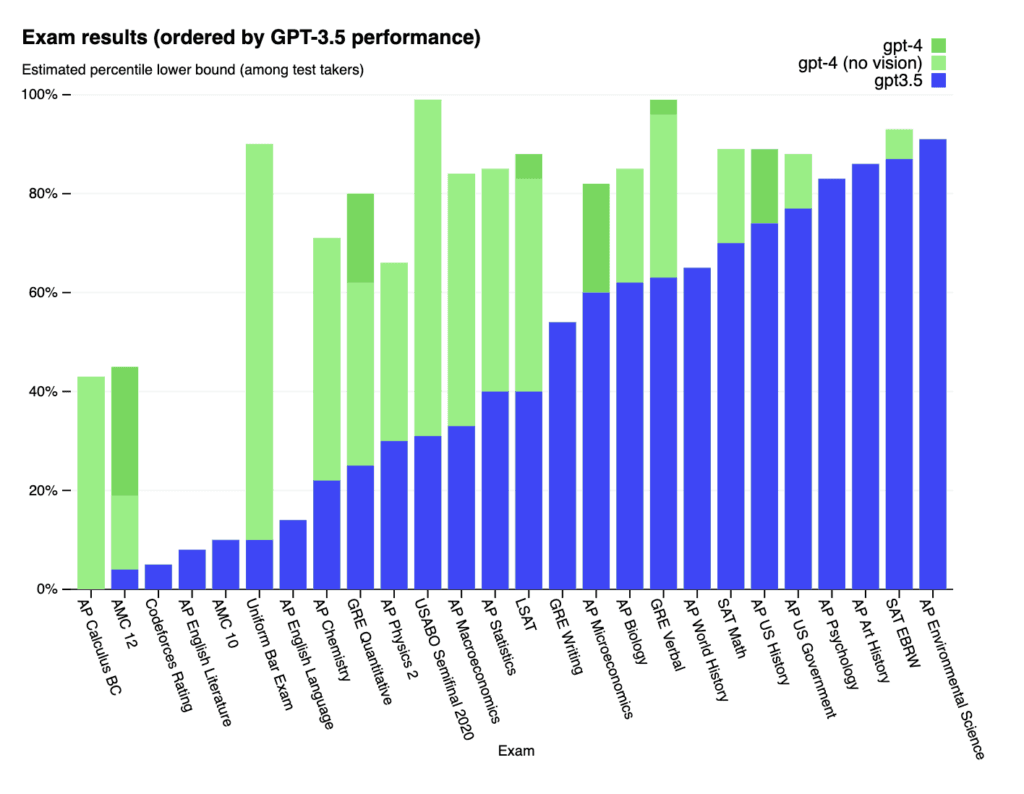

Cybersecurity, a field already reeling from talent shortages and an increasing number of cyberattacks, must reevaluate how it deals with AI-enabled, increasingly sophisticated threats.

Compared with last year, the cybersecurity talent gap has narrowed, but it remains at 54%. Despite this improvement, the skills gap still stands at about 34%, a critical issue that can be addressed by pairing AI with existing talent and skills. Source: World Economic Forum, Global Cybersecurity Outlook 2023

This raises concerns about the current state of cybersecurity tools, which need to be adapted and updated to address the risks posed by AI. The following sections explore the most critical risks AI poses to enterprise cybersecurity. In a separate blog article, we will look at how these risks can be addressed at scale.

The Risks of AI

AI-Enabled Phishing

ChatGPT, Bard, and Claude are among the most advanced text-generating AI models. They can generate text from complex prompts, reason through arguments, read a given text, and write like humans. Because they chat fluently without spelling, grammar, or verb-tense mistakes (or add deliberate errors to sound more human), their output seems natural. This makes the technology a game-changer for attackers.

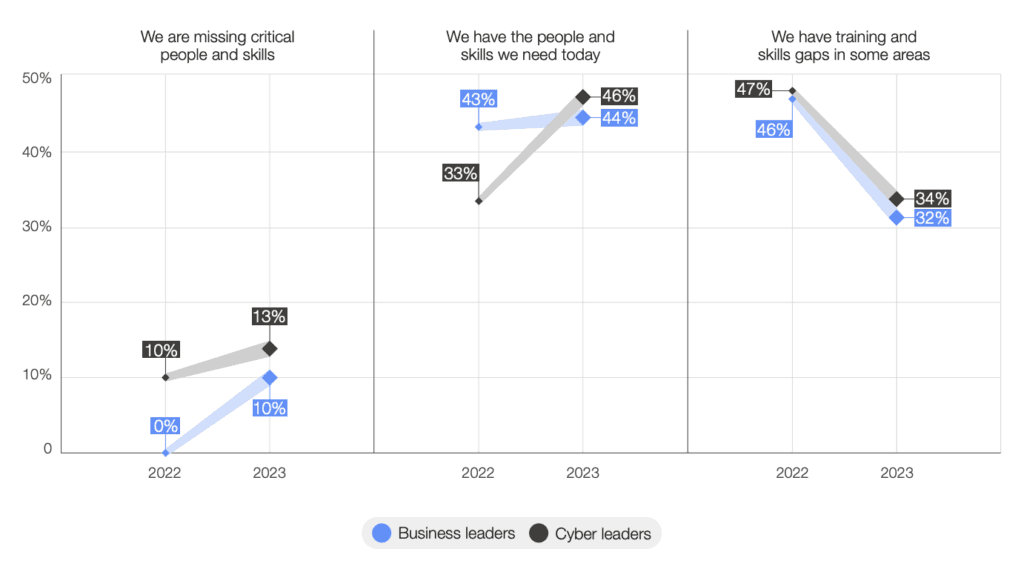

The FBI's 2021 Internet Crime Report found that phishing is the most common security threat for enterprises and individuals. Many phishing attempts are easy to spot because of misspellings, poor grammar, and generally awkward phrasing, but ChatGPT can remove that language barrier, helping attackers write targeted, convincing messages that are hard to distinguish from a real person's email.

Per the FBI's Internet Crime Report, phishing is the biggest threat to organization-wide cybersecurity.

For cybersecurity leaders, this requires immediate attention and action. For starters, leaders need to equip their teams with tools that can differentiate between AI-generated and human-written text, especially in emails from outside the organization. But as humans, we naturally miss things during busy schedules. These tools must therefore be orchestrated and automated, so that security does not depend on someone remembering to use them and the risk of human oversight is removed.

Malicious Code Attack (Malware)

This attack can be carried out by injecting AI-generated malicious code into a product's existing code base or into a tool or repository an organization uses internally. ChatGPT is good at generating code in many programming languages, and attackers can leverage this capability to create malicious scripts that steal user information, or embed such code in email attachments that recipients may download onto their devices by mistake.

“ChatGPT is proficient at generating code and other computer programming tools, but the AI is programmed not to generate code that it deems to be malicious or intended for hacking purposes.” —HBR.

Yet these safeguards can be circumvented: with carefully engineered prompts, ChatGPT and other AI models can still be coaxed into generating malicious code. TechTarget wrote about how hackers can use ChatGPT to create malware.

Advanced Persistent Threats (APTs)

An APT is a sophisticated, sustained attack in which an intruder gains undetected access to a network and remains there for a long time to steal sensitive data. Infusing AI into APTs makes these attacks even more complex and, therefore, harder to detect. Attackers can leverage generative AI to create compelling phishing campaigns that give them an initial foothold in an organization's network, from which they can exploit its vulnerabilities.

Deepfake attacks

Deepfake attacks use AI-generated synthetic media, such as videos or images, to impersonate real people and carry out fraudulent campaigns. Attackers can create convincing media and share it with vulnerable users in an organization to gain access to private data or secret credentials. Generative AI tools such as DALL-E and Midjourney, along with the many products built on top of generative models, already let a broad audience create engaging visual content in minutes. Adversaries can leverage the same tools to launch deepfake-enabled social engineering attacks.

With the help of deep fakes, fraudsters can orchestrate social engineering attacks that appear to come from a friend or colleague, someone we know and trust and whose motives do not need to be questioned. —TripWire

Over-reliance on AI

As generative AI continues to upend different aspects of modern enterprises, its potential exploitation by attackers becomes an ever-growing concern. Malicious actors can harness AI to launch sophisticated cyberattacks, using generative models to craft highly targeted phishing campaigns, convincingly imitate legitimate communication, and intelligently exploit system vulnerabilities. This added complexity makes it increasingly difficult for organizations to identify and mitigate threats, potentially exposing them to prolonged, undetected data breaches.

What's next?

Conversations about AI, both the good and the bad, have become social fodder. As AI models become more capable, it is critical that organizations and leaders start considering what this means for their teams, products, data, and workflows. The risk posed by AI, especially generative AI, is easy to neglect because most of what we see or hear about the technology is how it will change the paradigm of work and how many jobs it may take. Yet addressing this risk is a critical need for a business of any size; otherwise, the business risks being outmatched not only by cyber attackers but also by its competitors.

Enterprises must remain vigilant and adapt their cybersecurity strategies to address the mounting risks posed by AI-enabled attackers. By doing so, they can better safeguard their sensitive data and maintain a robust security posture in the face of evolving threats.

Read more:

We recently wrote about the 5 pains Generative AI will solve in cybersecurity.